We recently worked on a technical SEO project for a large enterprise in the online visa processing space. The client’s website had over 2,000 pages and received more than 600K visitors a month from Google. Despite that traffic, the site had a range of technical issues that threatened its growth, ranking stability, and user experience.

In this case study, we’ll break down the core issues we identified, how we addressed them, the challenges we faced, and the results we achieved.

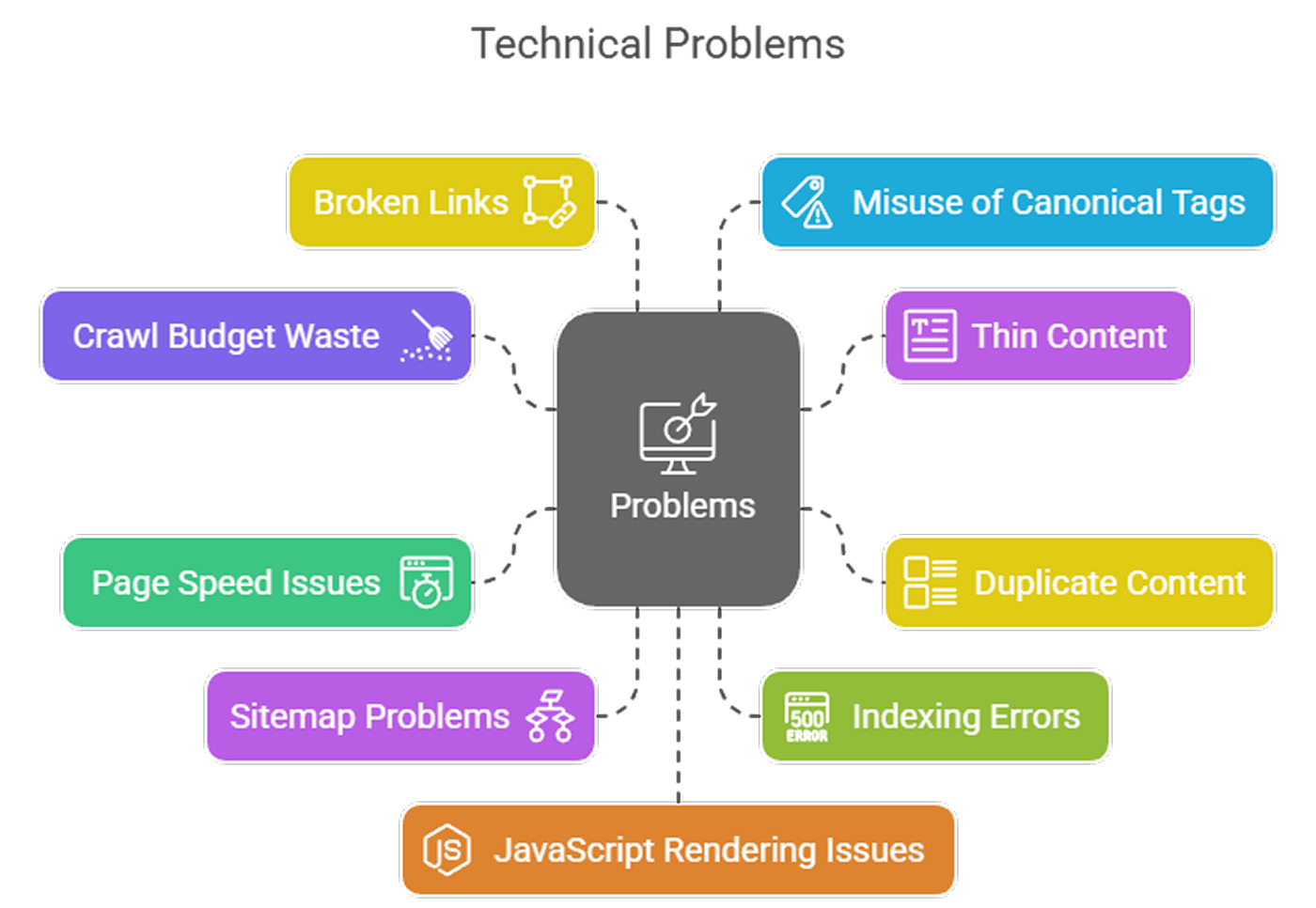

Major Technical SEO Problems We Identified

When dealing with large enterprise websites, especially those in fast-moving industries like travel and visa processing, technical debt often piles up. Through a comprehensive technical audit, we discovered several issues that were significantly hurting crawlability, indexation, and user experience:

Crawl Budget Waste

Search engines were wasting crawl budget on irrelevant or low-value pages, many of which were dynamically generated or outdated. This led to important pages being crawled less frequently.

Thin Content

Several service pages had minimal or redundant content, offering little value to users and search engines. This diluted topical authority and caused ranking stagnation.

Duplicate Content

Language-specific variations and poorly structured URLs had created duplicate content across the site. This sent mixed signals to search engines about which URL should be indexed as the canonical version.
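Near-duplicate pages like these can be surfaced by comparing page text. The sketch below uses word shingling with Jaccard similarity, a common technique that is not necessarily the one used in this audit. The 5-word shingle size and the example sentences are assumptions, and any cut-off for calling two pages duplicates (for example 0.8) would need tuning on real pages.

```python
import re


def shingles(text: str, size: int = 5) -> set[tuple[str, ...]]:
    """Split text into overlapping word n-grams ("shingles")."""
    words = re.findall(r"\w+", text.lower())
    # Texts shorter than `size` words produce an empty set.
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str, size: int = 5) -> float:
    """Jaccard similarity of two texts' shingle sets (1.0 = identical wording)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical copy from two service pages that differ by one word.
page_a = "Apply for your India e-Visa online in three simple steps"
page_b = "Apply for your India e-Visa online in three easy steps"
print(round(similarity(page_a, page_b), 2))  # → 0.56
```

In practice you would compare only the main content of each page, with navigation and footer text stripped, so shared boilerplate does not inflate the score.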

Page Speed Issues

Multiple pages scored poorly on Core Web Vitals due to unoptimized JavaScript, large image files, and excessive DOM size. This hurt user experience and rankings.

Sitemap Problems

The sitemap was outdated and included noindex pages and broken URLs, while several key pages were missing altogether.

Indexing Errors

Many important pages were not indexed because of misapplied robots meta tags, robots.txt exclusions, and JavaScript rendering delays.
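A quick way to catch robots.txt exclusions that accidentally cover important pages is to test a list of priority URLs against the rules. This sketch uses Python's standard `urllib.robotparser`; the robots.txt content and URLs are hypothetical. Note that this parser does simple prefix matching and applies the first matching rule, whereas Google supports `*` and `$` wildcards and picks the most specific rule, so results can differ for complex files.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the second rule accidentally blocks a whole section.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /apply
"""

# Hypothetical URLs that must stay crawlable.
PRIORITY_URLS = [
    "https://example.com/visa/india/",
    "https://example.com/apply/india-e-visa/",
]


def blocked_priority_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the priority URLs that the given robots.txt disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_priority_urls(ROBOTS_TXT, PRIORITY_URLS))
```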

Broken Links

Over time, dozens of internal and external links had become broken due to content removal or URL restructuring.

Misuse of Canonical Tags

Canonical tags were implemented inconsistently, sometimes pointing to unrelated pages or to conflicting versions, which diluted ranking signals.

JavaScript Rendering Issues

Heavy reliance on JavaScript for content delivery led to crawlability issues. Key information wasn’t being seen by Googlebot, especially on mobile.

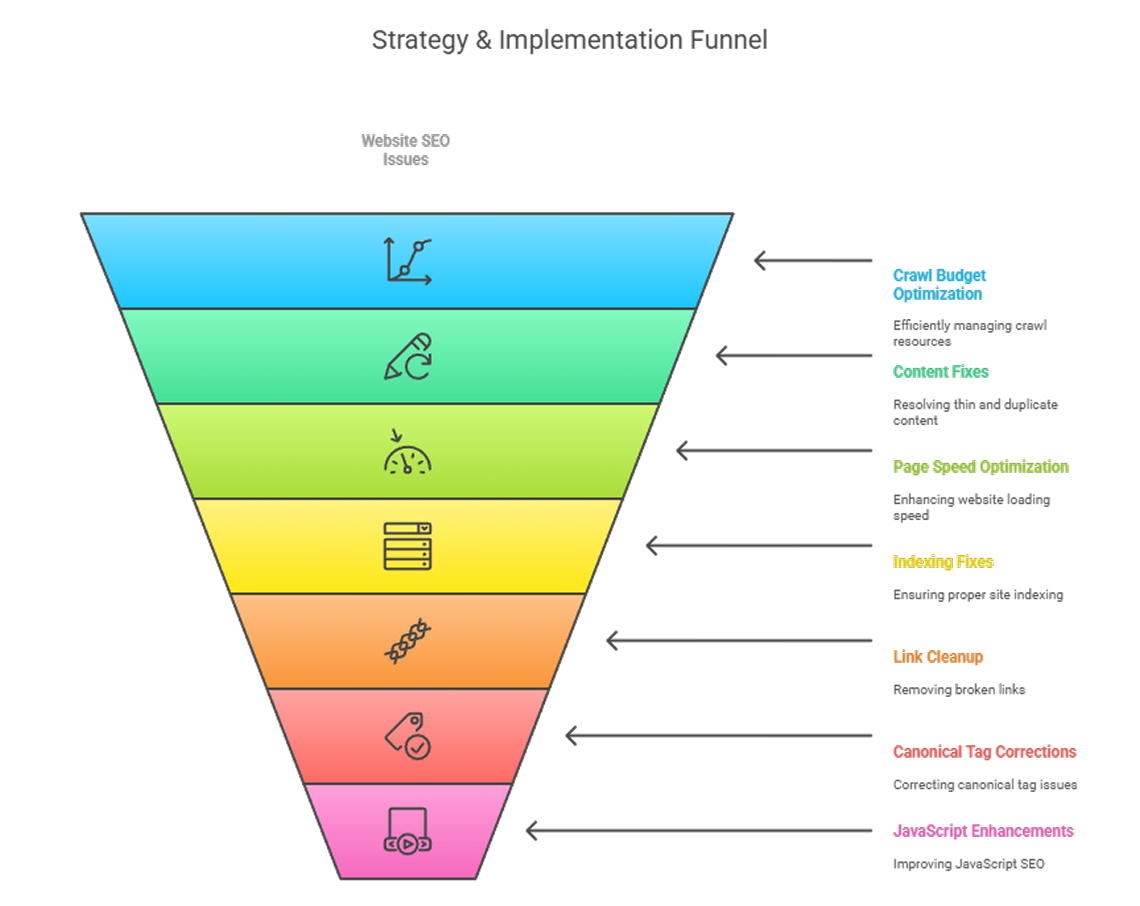

Our Strategy & Implementation Process

After mapping out over 50 technical elements during our audit, we prioritized the most critical ones using a severity vs. impact matrix. Then we executed the solutions in collaboration with the client’s in-house developers:

✅ Crawl Budget Optimization

Blocked low-value and duplicate pages using robots.txt (to prevent crawling) and noindex tags (to prevent indexing).

Implemented URL pruning for unnecessary parameterized pages.

Structured pagination and faceted navigation using best practices.
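The URL-pruning step can be sketched as a normalization pass over crawled URLs: strip query parameters that do not change page content, then group the variants that collapse onto the same address. The allow-list of content parameters (`country`, `visa_type`) and the example URLs are hypothetical. The grouped variants are the candidates for robots.txt rules, noindex tags, or canonical tags.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical allow-list: query parameters that genuinely change page content.
# Everything else (sorting, session IDs, tracking tags) is treated as noise.
CONTENT_PARAMS = {"country", "visa_type"}


def normalize_url(url: str, keep: set[str] = CONTENT_PARAMS) -> str:
    """Return the URL with non-content parameters removed and the rest sorted."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key in keep
    )
    # Drop the fragment too: it never reaches the server.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def group_parameter_variants(urls: list[str]) -> dict[str, list[str]]:
    """Map each normalized URL to the crawled variants that collapse onto it."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    # Only groups with more than one variant represent wasted crawl budget.
    return {key: variants for key, variants in groups.items() if len(variants) > 1}
```

For example, `/visa?country=in&sort=price` and `/visa?sort=date&country=in&utm_source=x` both normalize to `/visa?country=in`, so only one of them needs to be crawled and indexed.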

✅ Thin & Duplicate Content Fix

Rewrote weak content with in-depth, search-intent-aligned information.

Consolidated duplicate pages and applied proper canonical tags.

Deployed hreflang tags so search engines could identify language and regional variants instead of treating them as duplicates.
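Below is a sketch of hreflang generation for a single page, assuming hypothetical locale codes and `example.com` URLs. Google expects every language version to carry the same full set of annotations, including a link to itself, plus an optional `x-default` for users who match none of the listed languages.

```python
from html import escape

# Hypothetical mapping of hreflang codes to localized URLs for one page.
ALTERNATES = {
    "en": "https://example.com/en/visa/",
    "fr": "https://example.com/fr/visa/",
    "es": "https://example.com/es/visa/",
}


def hreflang_links(alternates: dict[str, str], x_default: str) -> list[str]:
    """Build <link rel="alternate"> tags; every language version gets the full set."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    # x-default names the URL to serve when no listed language matches the user.
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return tags


for tag in hreflang_links(ALTERNATES, "https://example.com/en/visa/"):
    print(tag)
```

The same list would be rendered into the `<head>` of the English, French, and Spanish pages alike. Missing return links between versions are a common reason hreflang annotations get ignored.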

✅ Page Speed Optimization

Lazy-loaded images and eliminated render-blocking resources.

Minified CSS/JS and implemented efficient caching strategies.

Optimized images using next-gen formats (WebP).
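For the caching piece, one widely used approach (assumed here, not confirmed as the client's setup) is to give fingerprinted static files a long, immutable cache lifetime and make HTML revalidate on every request. The sketch maps a request path to a `Cache-Control` value; the hash-in-filename convention and the one-hour fallback are assumptions.

```python
import re

# Assumed build convention: a content hash is added to the filename, e.g. app.3f9a1c2b.js.
FINGERPRINT = re.compile(r"\.[0-9a-f]{8,}\.(?:js|css|webp|woff2)$")


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value for a request path."""
    if FINGERPRINT.search(path):
        # The filename changes whenever the content changes, so cache for a year.
        return "public, max-age=31536000, immutable"
    if path.endswith((".js", ".css", ".webp", ".png", ".jpg", ".svg")):
        # Unversioned assets: cache for an hour, then revalidate.
        return "public, max-age=3600, must-revalidate"
    # HTML documents: always revalidate so content updates show up immediately.
    return "no-cache"
```

The design relies on cache busting through filenames: because a changed file gets a new name, browsers never serve a stale script or stylesheet even with a year-long lifetime.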

✅ Sitemap & Indexing Fixes

Generated a dynamic XML sitemap that updates daily.

Removed outdated and blocked pages from the sitemap.

Fixed noindex/index conflicts and submitted the updated sitemaps in Google Search Console (GSC).
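Here is a minimal sketch of the sitemap rule described above: only URLs that return 200 and are not noindexed get a `<loc>` entry. The `Page` record is hypothetical (for example, built from a CMS export joined with crawl data), and running it daily would be handled by whatever scheduler the site already uses.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    # Hypothetical record; field names are illustrative.
    url: str
    status: int       # HTTP status from the latest crawl
    noindex: bool     # True if the page carries a noindex directive
    lastmod: str      # W3C date, e.g. "2024-05-01"


def build_sitemap(pages: list[Page]) -> bytes:
    """Return UTF-8 sitemap XML containing only indexable, 200-status URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page.status != 200 or page.noindex:
            continue  # broken, redirected, or noindexed URLs stay out of the sitemap
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page.url
        ET.SubElement(url, "lastmod").text = page.lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```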

✅ Broken Link Cleanup

Used Screaming Frog to crawl the entire site and extract all 404s.

Delivered a report listing broken links, their sources, and replacements.

Implemented 301 redirects where necessary.
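The broken-link report can be sketched as a grouping step over crawl output. This assumes the crawl has already been exported into simple (source, target, status) records; the `Link` fields are hypothetical and are not Screaming Frog's export column names. Targets with a known replacement double as entries for the 301 redirect map.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    source: str   # page containing the link
    target: str   # URL the link points to
    status: int   # HTTP status returned when the target was requested


def broken_link_report(links: list[Link], replacements: dict[str, str]) -> list[dict]:
    """Group 404 targets with every page linking to them and a suggested fix."""
    sources_by_target: dict[str, set[str]] = defaultdict(set)
    for link in links:
        if link.status == 404:
            sources_by_target[link.target].add(link.source)
    return [
        {
            "broken": target,
            "linked_from": sorted(sources),
            # None means no replacement is known yet and one must be chosen manually.
            "replacement": replacements.get(target),
        }
        for target, sources in sorted(sources_by_target.items())
    ]
```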

✅ Canonical Tag Corrections

Standardized canonical tag logic across all templates.

Removed conflicting tags and ensured self-referencing canonicals on key pages.
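A template-level canonical check might look like the following sketch, built on Python's standard `html.parser`. It classifies a page as missing a canonical, conflicting (several different canonicals), pointing elsewhere, or self-referencing. URLs are compared as exact strings, which is a simplifying assumption; "points elsewhere" is expected on consolidated duplicates and is only a problem on key pages that should be self-referencing.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # rel can hold several space-separated tokens; attribute values may be None.
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel:
            self.canonicals.append(attributes.get("href"))


def audit_canonical(page_url: str, html: str) -> str:
    """Classify the canonical setup of one page."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if not finder.canonicals:
        return "missing"
    if len(set(finder.canonicals)) > 1:
        return "conflicting"
    if finder.canonicals[0] != page_url:
        return "points elsewhere"
    return "self-referencing"
```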

✅ JavaScript SEO Enhancements

Worked with the development team to implement server-side rendering (SSR) for key templates.

Ensured fallback content for critical elements where SSR wasn’t possible.
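To verify SSR or fallback content, one option is to check that critical text is present in the raw HTML response, before any JavaScript executes. This sketch assumes the raw HTML has already been fetched (for example with a plain HTTP request) and that the list of required phrases comes from the page template; both inputs here are hypothetical.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect text from raw HTML, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_from_initial_html(raw_html: str, required_phrases: list[str]) -> list[str]:
    """Return the phrases that are absent from the HTML before JavaScript runs."""
    parser = VisibleTextExtractor()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in required_phrases if phrase.lower() not in text]
```

A client-rendered shell such as `<div id="root"></div><script>…</script>` would report every phrase as missing, while a server-rendered page would return an empty list.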

Results Achieved

Our work led to significant technical improvements and SEO wins:

✅ 70% improvement in Core Web Vitals scores across key pages

✅ Crawl efficiency increased by 45% (according to the GSC Crawl Stats report)

✅ Index coverage increased by 18%, with over 350 valuable pages recovered

✅ Bounce rate dropped by 22% due to better speed and internal linking

✅ Keyword ranking growth across 1,200 tracked keywords

✅ Broken links reduced to under 1% site-wide

✅ Improved crawl-to-index ratio, saving crawl budget for priority URLs

Challenges We Faced

Working on an enterprise-level website comes with its own set of challenges:

Developer Dependency

Due to site architecture and internal policy, all changes had to be executed by the client-side developers. We had to provide highly detailed implementation documents and conduct regular syncs to ensure progress.

JavaScript Limitations

Several key areas of the site were rendered entirely via JS, and switching to SSR was met with pushback due to infrastructure concerns. We had to prioritize partial SSR and fallbacks strategically.

Customized Reporting

The client required detailed, real-time reporting. We built a tailored dashboard in Looker Studio (formerly Google Data Studio) connected to GA4, GSC, and BigQuery to track all technical KPIs and share progress transparently.

Conclusion

This project is a great example of how even a high-traffic enterprise site can suffer from deeply rooted technical issues. By implementing structured audits, prioritizing issues, and collaborating closely with the internal team, we were able to significantly enhance the technical SEO health of the client’s website.

If you’re struggling with technical SEO on your enterprise site, don’t wait until rankings drop. Book a free SEO consultation with us today and let’s unlock your site’s full potential.